our projects

AI-POWERED LABELING FOR ADAS

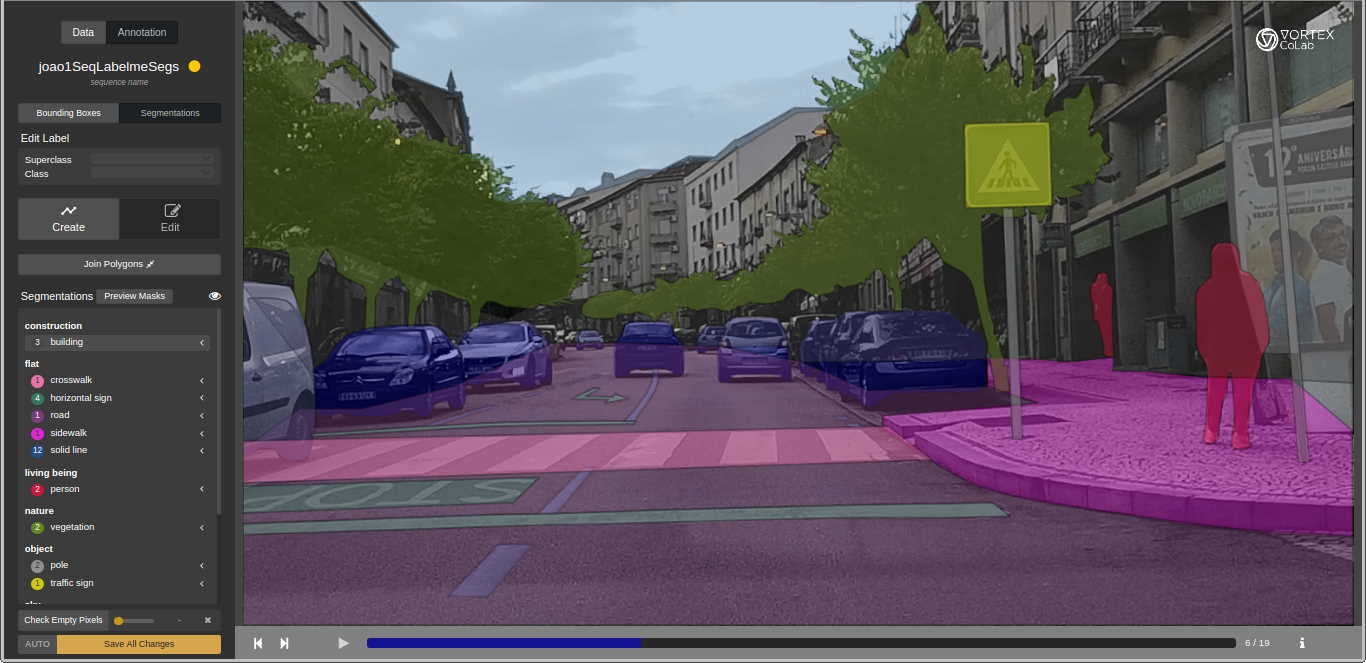

Semi-automated labeling tool powered with Artificial Intelligence to address the challenge of ground truth measurements in ADAS applications

A critical aspect in creating autonomous driving systems is the use of robust AI-based perception models. For this purpose, development teams need access to enough representative labeled data to create an accurate learning paradigm.

An acceptable performance of these models, specifically in deep learning computer vision applications, comes at a large data cost given the scale in the tenths of thousands of required ground truth labels for training and validating them.

Depending on the image or video understanding task, the required annotations may range from tags at the image level (image classification), to bounding boxes (object detection) or pixel-level annotations (image segmentation).

However, large-scale annotation of image datasets is often prohibitively expensive and time-consuming as it usually requires a huge number of worker hours through manual labeling to obtain high-quality results.

OUR APPROACH

- Working MVP of a web-based interface semi-automatic annotation tool

- Annotation types: 2D Bounding Boxes, Polygons, Segmentation, Object Tracking for camera and point cloud data

- Combination of Ai algorithms and sensor fusion for automatic classification proposals of multiple classes

- Compatible with Tensorflow and Pytorch models

- Image and point cloud compatibility

- ROS framework backbone, for module maintainability and scalability

- Video, Image and ROS bag files upload

KEY BENEFITS

- Support our partners with high quality labeled data

- AI-assisted annotation tool speed up productivity of annotators multifold

- Maintain high quality while driving productivity

- UI designed to minimize time per click, frame navigation, etc.